Paper Study with DGL

Graph neural networks and their variants

Graph convolutional network (GCN) [research paper] [tutorial] [PyTorch code] [MXNet code]:

Graph attention network (GAT) [research paper] [tutorial] [PyTorch code] [MXNet code]: GAT extends GCN by applying multi-head attention over the neighborhood of each node, so that a node can weight its neighbors differently when it aggregates their features. This increases the capacity and expressiveness of the model.
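The attention step can be illustrated without any framework. The following is a minimal sketch in plain Python, not the DGL implementation: it assumes the learned projection is the identity, and the toy graph, the `attention_head` helper, and the attention vectors are made up for illustration.

```python
import math

def softmax(scores):
    """Normalize raw scores into weights that sum to 1."""
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def leaky_relu(x, slope=0.2):
    return x if x > 0 else slope * x

def attention_head(features, neighbors, attn_vector):
    """One head: every node takes an attention-weighted average of its neighbors."""
    out = {}
    for node, nbrs in neighbors.items():
        # Unnormalized score per neighbor, computed from the concatenated
        # features of the node and that neighbor (assumption: no projection).
        scores = [
            leaky_relu(sum(a * x for a, x in zip(attn_vector, features[node] + features[nbr])))
            for nbr in nbrs
        ]
        weights = softmax(scores)
        dim = len(features[node])
        out[node] = [
            sum(w * features[nbr][d] for w, nbr in zip(weights, nbrs))
            for d in range(dim)
        ]
    return out

# Hypothetical toy graph: node -> list of neighbors (self-loops included).
features = {0: [1.0, 0.0], 1: [0.0, 1.0], 2: [1.0, 1.0]}
neighbors = {0: [0, 1, 2], 1: [0, 1], 2: [0, 2]}

# Two heads with different (made-up) attention vectors; outputs are concatenated.
head_a = attention_head(features, neighbors, [1.0, 0.0, 0.0, 1.0])
head_b = attention_head(features, neighbors, [0.0, 1.0, 1.0, 0.0])
multi_head = {node: head_a[node] + head_b[node] for node in features}
```

Each head produces a convex combination of neighbor features, and concatenating the heads doubles the output width per node. In a trained model the attention vectors and a projection matrix would be learned rather than fixed.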

Relational-GCN [research paper] [tutorial] [PyTorch code] [MXNet code]: Relational-GCN allows multiple edges between two entities of a graph. Edges that represent distinct relations are encoded differently.
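As a rough illustration of encoding relations differently, the sketch below gives each relation type its own weight matrix and averages incoming messages per relation. It is plain Python under stated assumptions, not DGL code: the `rgcn_layer` helper and the relation names `likes` and `follows` are hypothetical, and the nonlinearity and any weight-sharing scheme are omitted.

```python
def matvec(weight, vector):
    """Multiply a matrix (list of rows) by a vector."""
    return [sum(w * x for w, x in zip(row, vector)) for row in weight]

def rgcn_layer(features, edges, rel_weights, self_weight):
    """One relational layer: per-relation transform, per-relation mean, plus a self term."""
    # Collect transformed messages, grouped by (destination, relation).
    grouped = {}
    for src, rel, dst in edges:
        message = matvec(rel_weights[rel], features[src])
        grouped.setdefault((dst, rel), []).append(message)

    # Start from the self-connection term, then add the normalized messages.
    out = {node: matvec(self_weight, h) for node, h in features.items()}
    for (dst, _rel), messages in grouped.items():
        for message in messages:
            for d, value in enumerate(message):
                out[dst][d] += value / len(messages)
    return out

# Two parallel edges between the same pair of nodes, with different relations.
features = {0: [1.0, 2.0], 1: [0.0, 0.0]}
edges = [(0, "likes", 1), (0, "follows", 1)]
rel_weights = {
    "likes": [[1.0, 0.0], [0.0, 1.0]],
    "follows": [[2.0, 0.0], [0.0, 2.0]],
}
self_weight = [[1.0, 0.0], [0.0, 1.0]]

updated = rgcn_layer(features, edges, rel_weights, self_weight)
```

With these toy weights, node 1 receives `[1.0, 2.0]` through `likes` and `[2.0, 4.0]` through `follows`, so the two parallel edges contribute differently to the same destination.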

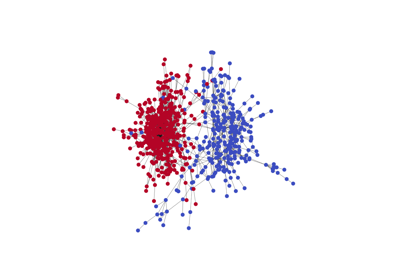

Line graph neural network (LGNN) [research paper] [tutorial] [PyTorch code]: This network focuses on community detection by inspecting graph structures. It uses representations of both the original graph and its line-graph companion. In addition to demonstrating how an algorithm can harness multiple graphs, this implementation shows how you can judiciously mix dense tensor operations and sparse-matrix tensor operations, along with message-passing with DGL.
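To make the "line-graph companion" concrete: every edge of the original graph becomes a node of the line graph, and two such nodes are connected when the first edge ends where the second begins. The sketch below builds that structure from an edge list in plain Python. The `line_graph` helper and its `backtracking` flag are illustrative names, and excluding immediate reversals by default is an assumption about the non-backtracking variant.

```python
def line_graph(edges, backtracking=False):
    """Return line-graph edges (i, j) over indices into `edges`.

    Edge i = (u, v) connects to edge j = (v, w). When `backtracking`
    is False, the immediate reversal (w == u) is skipped.
    """
    lg_edges = []
    for i, (u, v) in enumerate(edges):
        for j, (x, w) in enumerate(edges):
            if i != j and v == x and (backtracking or w != u):
                lg_edges.append((i, j))
    return lg_edges

# An undirected triangle, written as directed edges in both directions.
triangle = [(0, 1), (1, 0), (1, 2), (2, 1), (2, 0), (0, 2)]

non_backtracking = line_graph(triangle)
with_backtracking = line_graph(triangle, backtracking=True)
```

For the triangle, each directed edge has exactly one non-backtracking successor, so `non_backtracking` has 6 entries while `with_backtracking` has 12. The quadratic loop is fine for a toy example but would be replaced by sparse operations on real graphs.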

Batching many small graphs

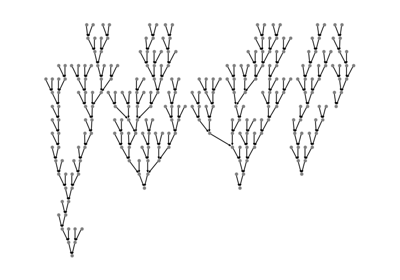

Tree-LSTM [paper] [tutorial] [PyTorch code]: Sentences have inherent structures that are thrown away when they are treated simply as sequences. Tree-LSTM is a powerful model that learns the representation by using prior syntactic structures such as a parse tree. The challenge in training is that simply padding each sentence to the maximum length no longer works, because the trees of different sentences have different sizes and topologies. DGL solves this problem by adding the trees to a bigger container graph, and then using message-passing to exploit maximum parallelism. Batching is a key API for this.
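The container-graph idea can be sketched in a few lines of plain Python. This is only an illustration of the concept, not DGL's batching API: `batch_graphs` and `frontiers` are hypothetical helpers, and trees are assumed to be given as a node count plus child-to-parent edges.

```python
def batch_graphs(graphs):
    """Merge (num_nodes, edges) graphs into one graph by offsetting node IDs."""
    merged_edges, offsets, total = [], [], 0
    for num_nodes, edges in graphs:
        offsets.append(total)
        merged_edges.extend((u + total, v + total) for u, v in edges)
        total += num_nodes
    return total, merged_edges, offsets

def frontiers(num_nodes, edges):
    """Group nodes into levels; a node is ready once all its children are done."""
    pending = [0] * num_nodes  # children not yet processed, per node
    parent = {}
    for child, par in edges:
        pending[par] += 1
        parent[child] = par
    frontier = [n for n in range(num_nodes) if pending[n] == 0]  # leaves
    levels = []
    while frontier:
        levels.append(frontier)
        next_frontier = []
        for node in frontier:
            par = parent.get(node)
            if par is not None:
                pending[par] -= 1
                if pending[par] == 0:
                    next_frontier.append(par)
        frontier = next_frontier
    return levels

# Two parse trees of different size and shape (edges point child -> parent).
tree_a = (3, [(1, 0), (2, 0)])
tree_b = (5, [(1, 0), (2, 0), (3, 1), (4, 1)])

total, edges, offsets = batch_graphs([tree_a, tree_b])
levels = frontiers(total, edges)
```

After batching, the leaves of both trees land in the same first level, so one message-passing step can update all of them at once, with no padding. Each later level contains the nodes whose children have all been processed, regardless of which tree they came from.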

Generative models

DGMG [paper] [tutorial] [PyTorch code]: This model belongs to the family of models that generate graph structures. Deep generative models of graphs (DGMG) uses a state-machine approach. Training it is also very challenging because, unlike Tree-LSTM, every sample has a dynamic, probability-driven structure that is not available before training. You can progressively leverage intra- and inter-graph parallelism to steadily improve the performance.

Revisit classic models from a graph perspective

Capsule [paper] [tutorial] [PyTorch code]: This new computer vision model has two key ideas. First, it enriches the feature representation by using a vector (instead of a scalar), called a capsule. Second, it replaces max-pooling with dynamic routing. The idea of dynamic routing is to integrate a lower-level capsule into one or several higher-level capsules with non-parametric message-passing. The tutorial shows how the latter can be implemented with DGL APIs.
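Dynamic routing can be read as message-passing on a bipartite graph between lower-level and higher-level capsules. The sketch below is a plain-Python approximation of routing by agreement, not the tutorial's DGL code: the `dynamic_routing` helper, the default of three iterations, and the toy prediction vectors are assumptions made for illustration.

```python
import math

def softmax(scores):
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def squash(vector):
    """Shrink a vector so its length lies in [0, 1) while keeping its direction."""
    sq_norm = sum(x * x for x in vector)
    if sq_norm == 0.0:
        return [0.0] * len(vector)
    scale = sq_norm / (1.0 + sq_norm) / math.sqrt(sq_norm)
    return [scale * x for x in vector]

def dynamic_routing(u_hat, iterations=3):
    """u_hat[i][j] is lower capsule i's prediction vector for upper capsule j."""
    n_in, n_out, dim = len(u_hat), len(u_hat[0]), len(u_hat[0][0])
    logits = [[0.0] * n_out for _ in range(n_in)]  # one routing logit per edge
    for _ in range(iterations):
        # Each lower capsule distributes its vote over the upper capsules.
        coupling = [softmax(row) for row in logits]
        outputs = []
        for j in range(n_out):
            weighted_sum = [
                sum(coupling[i][j] * u_hat[i][j][d] for i in range(n_in))
                for d in range(dim)
            ]
            outputs.append(squash(weighted_sum))
        # Agreement between a prediction and the output raises that edge's logit.
        for i in range(n_in):
            for j in range(n_out):
                logits[i][j] += sum(a * b for a, b in zip(u_hat[i][j], outputs[j]))
    return outputs, coupling

# Three lower capsules agree on upper capsule 0 and disagree on upper capsule 1.
u_hat = [
    [[1.0, 0.0], [1.0, 0.0]],
    [[1.0, 0.0], [-1.0, 0.0]],
    [[1.0, 0.0], [0.0, 1.0]],
]
outputs, coupling = dynamic_routing(u_hat)
```

No learned parameters appear inside the routing loop, which matches the "non-parametric" description above. In this toy input the coupling weights shift toward upper capsule 0, where the predictions agree.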

Transformer [paper] [tutorial] [PyTorch code] and Universal Transformer [paper] [tutorial] [PyTorch code]: These two models replace recurrent neural networks (RNNs) with several layers of multi-head attention to encode and discover structures among the tokens of a sentence. These attention mechanisms can likewise be formulated as graph operations with message-passing.