Overview of DGL

Deep Graph Library (DGL) is a Python package built for easy implementation of the graph neural network family of models, on top of existing deep learning frameworks (e.g. PyTorch, MXNet/Gluon).

DGL reduces the implementation of a graph neural network to declaring a set of functions (or modules, in PyTorch terminology). In addition, DGL provides:

- Versatile controls over message passing, ranging from low-level operations such as sending along selected edges and receiving on specific nodes, to high-level control such as graph-wide feature updates.

- Transparent speed optimization with automatic batching of computations and sparse matrix multiplication.

- Seamless integration with existing deep learning frameworks.

- Easy and friendly interfaces for node/edge feature access and graph structure manipulation.

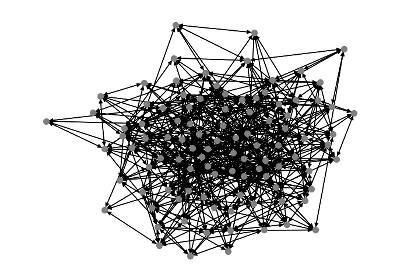

- Good scalability to graphs with tens of millions of vertices.
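The link between per-edge message passing and sparse matrix multiplication mentioned in the list above can be illustrated without any framework. The following is a minimal sketch in plain Python, not DGL's API: the graph, the feature values, and both function names are assumptions made up for illustration. It shows that sending each source node's feature along its outgoing edges and summing at the destination gives the same result as multiplying the adjacency matrix by the feature vector.

```python
# Toy directed graph: each (src, dst) pair is one edge (hypothetical data).
edges = [(0, 1), (0, 2), (1, 2), (2, 0)]
num_nodes = 3
# One scalar feature per node (hypothetical values).
feat = [1.0, 2.0, 3.0]


def message_passing_sum(edges, feat, num_nodes):
    """Edge-by-edge view: send the source feature along each edge, sum at dst."""
    out = [0.0] * num_nodes
    for src, dst in edges:
        out[dst] += feat[src]  # message = source feature, reduce = sum
    return out


def adjacency_matmul(edges, feat, num_nodes):
    """Matrix view: out = A @ feat, where A[dst][src] counts edges src -> dst."""
    adj = [[0.0] * num_nodes for _ in range(num_nodes)]
    for src, dst in edges:
        adj[dst][src] += 1.0
    return [sum(a * x for a, x in zip(row, feat)) for row in adj]


per_edge = message_passing_sum(edges, feat, num_nodes)
as_matmul = adjacency_matmul(edges, feat, num_nodes)
print(per_edge, as_matmul)
```

The matrix here is dense for readability; a sparse representation would store only the nonzero entries, which is what makes the matrix formulation attractive on large graphs.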

To begin with, we have prototyped 10 models across various domains: semi-supervised learning on graphs (with potentially billions of nodes and edges), generative models on graphs, previously difficult-to-parallelize tree-based models such as TreeLSTM, and others. We have also implemented some conventional models in DGL from a graph perspective, which yields simpler implementations.

Relationship of DGL to other frameworks

DGL is designed to be compatible with, and agnostic to, the existing tensor frameworks. It provides a backend adapter interface that allows easy porting to other tensor-based, autograd-enabled frameworks. Currently, our prototype works with MXNet/Gluon and PyTorch.
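To make the adapter idea concrete, here is a minimal sketch of how a backend adapter can decouple graph code from a tensor library. It is not DGL's actual interface: `ListBackend`, `register_backend`, `get_backend`, and `aggregate` are hypothetical names, and the "tensors" are plain Python lists so the example runs without any framework installed.

```python
class ListBackend:
    """Hypothetical backend that represents tensors as plain Python lists."""

    name = "list"

    def zeros(self, size):
        return [0.0] * size

    def add(self, a, b):
        return [x + y for x, y in zip(a, b)]


# Registry mapping a backend name to its adapter object (hypothetical).
_BACKENDS = {}


def register_backend(backend):
    _BACKENDS[backend.name] = backend


def get_backend(name):
    return _BACKENDS[name]


def aggregate(backend, messages, size):
    """Sum messages using only operations exposed by the adapter."""
    total = backend.zeros(size)
    for message in messages:
        total = backend.add(total, message)
    return total


register_backend(ListBackend())
result = aggregate(get_backend("list"), [[1.0, 2.0], [3.0, 4.0]], 2)
print(result)
```

In this sketch, porting to another framework would mean writing one more class with the same `zeros` and `add` methods; `aggregate` itself would not change.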

Get Started

Follow the instructions to install DGL. DGL at a glance is the most common place to get started. Each tutorial is accompanied by a runnable Python script and a Jupyter notebook, both of which can be downloaded.

Learning DGL through examples

The model tutorials are categorized based on the way they utilize DGL APIs.

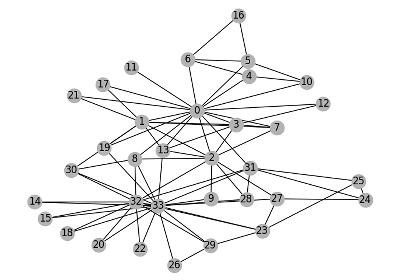

- Graph Neural Network and its variants: Learn how to use DGL to train popular GNN models on one input graph.

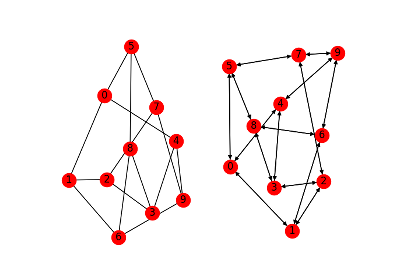

- Dealing with many small graphs: Learn how to batch many graph samples for maximum efficiency.

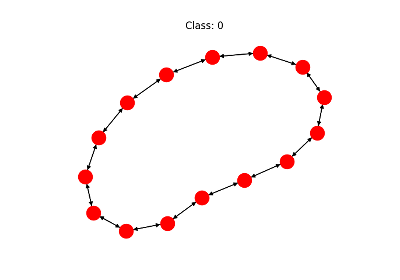

- Generative models: Learn how to deal with dynamically-changing graphs.

- Old (new) wines in new bottles: Learn how to combine DGL with a tensor-based deep learning framework in a flexible way. Explore new perspectives on traditional models through graphs.

Or go through all of them here.
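As a rough illustration of the "many small graphs" category, one common way to batch graph samples is to merge them into a single graph whose connected components are the original samples, shifting node IDs so they do not collide. The sketch below shows that idea in plain Python. It is an assumption-laden illustration, not DGL's batching API: `batch_graphs` and the `(num_nodes, edges)` representation are hypothetical.

```python
def batch_graphs(graphs):
    """Merge small graphs into one disjoint-union graph.

    Each graph is a (num_nodes, edges) pair. Returns the total node count,
    the merged edge list, and the node-ID offset of each input graph.
    """
    total = 0
    merged_edges = []
    offsets = []
    for num_nodes, edges in graphs:
        offset = total
        offsets.append(offset)
        # Shift node IDs so each sample occupies its own ID range.
        merged_edges.extend([(src + offset, dst + offset) for src, dst in edges])
        total += num_nodes
    return total, merged_edges, offsets


g1 = (2, [(0, 1)])          # two nodes, one edge
g2 = (3, [(0, 1), (1, 2)])  # three nodes, a path of two edges
total, merged_edges, offsets = batch_graphs([g1, g2])
print(total, merged_edges, offsets)
```

Because no edge crosses between samples, a single message passing step over the merged graph updates every sample at once, and the offsets allow the per-sample results to be separated afterwards.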

API Reference

- DGLGraph – Graph with node/edge features

- Feature Initializer

- BatchedDGLGraph – Enable batched graph operations

- Builtin functions

- Graph Traversal

- Message Propagation

- User-defined function related data structures

- Graph samplers

- Dataset

- Transform – Graph Transformation

- dgl.nn

- DGLSubGraph – Class for subgraph data structure

Free software

DGL is free software; you can redistribute it and/or modify it under the terms of the Apache License 2.0. We welcome contributions. Join us on GitHub and check out our contribution guidelines.

History

Prototyping of DGL started in early spring 2018 at NYU Shanghai, led by Prof. Zheng Zhang and Quan Gan. Serious development began when Minjie, Lingfan and Prof. Jinyang Li from NYU’s systems group joined, flanked by a team of student volunteers at NYU Shanghai, Fudan and other universities (Yu, Zihao, Murphy, Allen, Qipeng, Qi, Hao), as well as early adopters at the CILVR lab (Jake Zhao). Development accelerated when the AWS MXNet Science team joined forces, with Da Zheng, Alex Smola, Haibin Lin, Chao Ma and a number of others. For full credit, see here.