🆕 Stochastic Training of GNNs with GraphBolt

GraphBolt is a data loading framework for GNNs, designed for flexibility and scalability. It is built on top of DGL and PyTorch.

This tutorial introduces how to enable stochastic training of GNNs with GraphBolt.

Overview

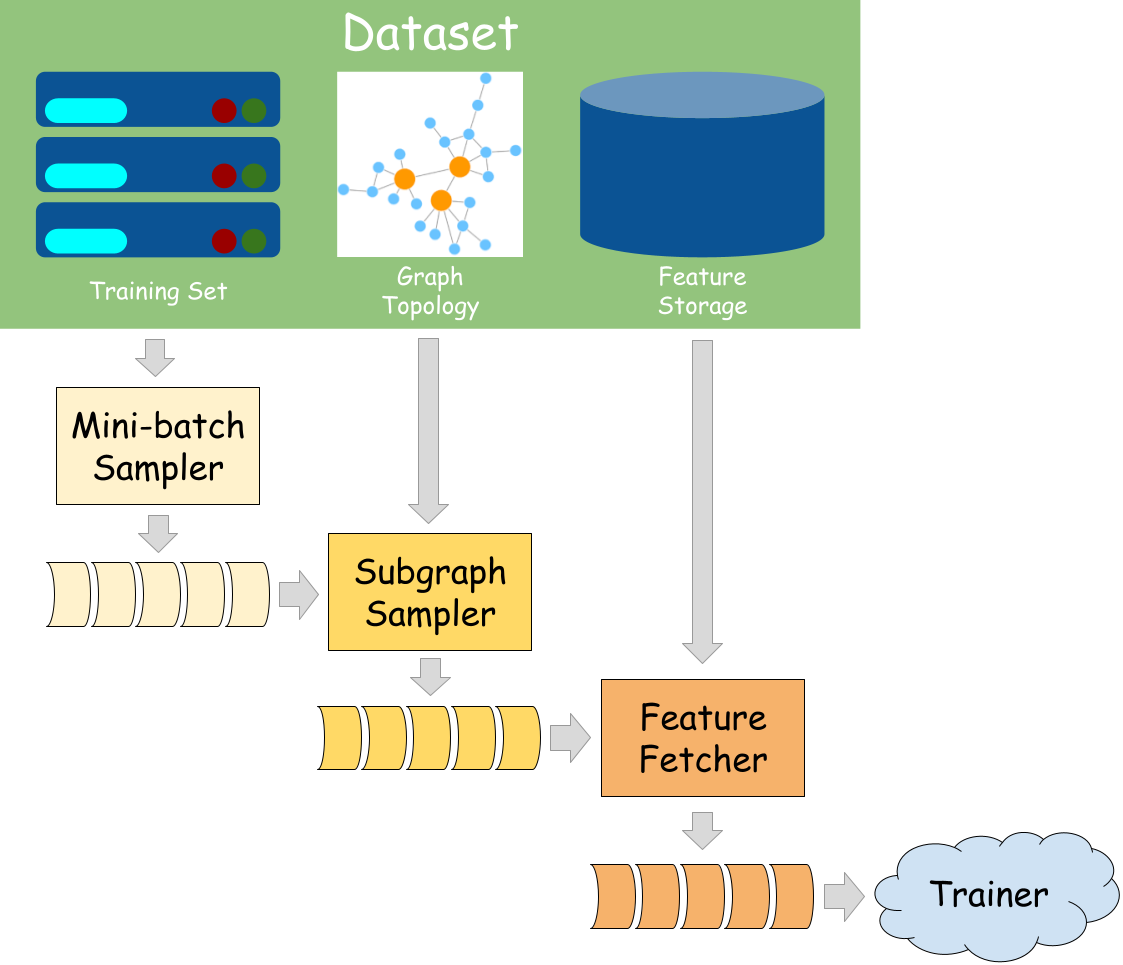

GraphBolt integrates seamlessly with PyTorch datapipes and relies on a unified “MiniBatch” data structure to pass data between processing stages. It streamlines data loading and preprocessing for GNN training, validation, and testing. Out of the box, GraphBolt provides a collection of built-in datasets and highly efficient datapipe implementations for common scenarios, summarized as follows:

Item Sampler: Randomly selects a subset of items (nodes, edges, or graphs) from the training set to form the initial mini-batch for downstream computation.

Negative Sampler: Designed specifically for link prediction tasks, it generates non-existent edges to serve as negative examples during training.

Subgraph Sampler: Generates subgraphs based on the input nodes/edges for computation.

Feature Fetcher: Fetches the relevant node/edge features from the dataset for the given input.
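To make the flow between these stages concrete, here is a minimal pure-Python sketch of a link prediction pipeline, chosen because it exercises all four stages. It is not GraphBolt code: GraphBolt's own classes (for example `dgl.graphbolt.ItemSampler`) have different signatures. The `MiniBatch` dataclass, its field names, and the functions `item_sampler`, `negative_sampler`, `subgraph_sampler`, `feature_fetcher`, and `build_pipeline` are all hypothetical. Negative sampling is assumed to corrupt the destination node uniformly at random, which is only one of several possible strategies.

```python
import random
from dataclasses import dataclass, field
from typing import Dict, Iterable, Iterator, List, Set, Tuple

Edge = Tuple[int, int]


@dataclass
class MiniBatch:
    # Hypothetical stand-in for GraphBolt's MiniBatch; field names are illustrative.
    pos_edges: List[Edge]
    neg_edges: List[Edge] = field(default_factory=list)
    sampled_edges: List[Edge] = field(default_factory=list)
    input_nodes: List[int] = field(default_factory=list)
    node_features: Dict[int, List[float]] = field(default_factory=dict)


def item_sampler(edges: List[Edge], batch_size: int, seed: int = 0) -> Iterator[MiniBatch]:
    """Item Sampler: shuffle the training edges and cut them into mini-batches."""
    items = list(edges)
    random.Random(seed).shuffle(items)
    for start in range(0, len(items), batch_size):
        yield MiniBatch(pos_edges=items[start:start + batch_size])


def negative_sampler(batches: Iterable[MiniBatch], num_nodes: int, existing: Set[Edge],
                     k: int, seed: int = 0) -> Iterator[MiniBatch]:
    """Negative Sampler: for each positive (u, v), draw k pairs (u, w) absent from the graph."""
    rng = random.Random(seed)
    for mb in batches:
        for u, _ in mb.pos_edges:
            # Enumerating candidates is O(num_nodes); acceptable for a toy graph only.
            candidates = [w for w in range(num_nodes) if w != u and (u, w) not in existing]
            if candidates:
                # choices() samples with replacement, so duplicates are possible.
                mb.neg_edges.extend((u, w) for w in rng.choices(candidates, k=k))
        yield mb


def subgraph_sampler(batches: Iterable[MiniBatch], in_neighbors: Dict[int, List[int]],
                     fanout: int, seed: int = 0) -> Iterator[MiniBatch]:
    """Subgraph Sampler: sample up to `fanout` in-neighbors of every node the batch touches."""
    rng = random.Random(seed)
    for mb in batches:
        seeds = list(dict.fromkeys(n for e in mb.pos_edges + mb.neg_edges for n in e))
        for dst in seeds:
            nbrs = in_neighbors.get(dst, [])
            for src in rng.sample(nbrs, min(fanout, len(nbrs))):
                mb.sampled_edges.append((src, dst))
        # Seeds first, then newly reached neighbors, without duplicates.
        mb.input_nodes = list(dict.fromkeys(seeds + [s for s, _ in mb.sampled_edges]))
        yield mb


def feature_fetcher(batches: Iterable[MiniBatch],
                    features: Dict[int, List[float]]) -> Iterator[MiniBatch]:
    """Feature Fetcher: attach the features of every input node."""
    for mb in batches:
        mb.node_features = {n: features[n] for n in mb.input_nodes}
        yield mb


def build_pipeline(edges: List[Edge], num_nodes: int, features: Dict[int, List[float]],
                   batch_size: int = 2, k: int = 1, fanout: int = 2) -> Iterator[MiniBatch]:
    """Chain the four stages lazily; each one consumes the previous stage's mini-batches."""
    in_neighbors: Dict[int, List[int]] = {}
    for u, v in edges:
        in_neighbors.setdefault(v, []).append(u)
    dp = item_sampler(edges, batch_size)
    dp = negative_sampler(dp, num_nodes, set(edges), k)
    dp = subgraph_sampler(dp, in_neighbors, fanout)
    return feature_fetcher(dp, features)


if __name__ == "__main__":
    edges = [(0, 1), (1, 2), (2, 3), (3, 0)]
    features = {n: [float(n)] for n in range(4)}
    for mb in build_pipeline(edges, num_nodes=4, features=features):
        print(mb.pos_edges, mb.neg_edges, mb.input_nodes)
```

Because every stage is a generator that receives and returns mini-batches, the single mini-batch object is the only contract between stages, which is the role the “MiniBatch” structure plays in the description above.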

By exposing the entire data loading process as a pipeline, GraphBolt provides significant flexibility and room for customization. Users can replace any stage with their own implementation, and they still benefit from the optimized datapipe scheduling strategy even when some stages are customized.
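Both points, swapping a stage and keeping the scheduling benefits, can be illustrated with a generic pure-Python sketch. `run_pipeline`, `prefetch`, the three stage functions, and the dictionary-based mini-batches are hypothetical and are not GraphBolt APIs. The scheduling shown (each stage runs in its own background thread and hands results through a small bounded queue) is one common prefetching technique for overlapping stages; it is an assumption for illustration, not a description of GraphBolt's actual scheduler. Because the wrapper is applied to whatever stage sits at each position, a user-defined stage is scheduled exactly like a built-in one.

```python
import queue
import threading
from typing import Callable, Iterable, Iterator, List

MiniBatch = dict  # hypothetical: a plain dict stands in for a mini-batch
Stage = Callable[[Iterator[MiniBatch]], Iterator[MiniBatch]]

_DONE = object()  # sentinel: the upstream iterator is exhausted


class _Failure:
    """Carries an exception raised in a worker thread back to the consumer."""

    def __init__(self, exc: BaseException) -> None:
        self.exc = exc


def prefetch(upstream: Iterable[MiniBatch], depth: int = 2) -> Iterator[MiniBatch]:
    """Consume `upstream` in a background thread, buffering up to `depth` items.

    The worker is a daemon thread, so abandoning the iterator early does not
    block interpreter exit (the thread simply stays parked on a full queue).
    """
    buf: queue.Queue = queue.Queue(maxsize=depth)

    def worker() -> None:
        try:
            for item in upstream:
                buf.put(item)
        except BaseException as exc:  # forward errors instead of losing them
            buf.put(_Failure(exc))
            return
        buf.put(_DONE)

    threading.Thread(target=worker, daemon=True).start()
    while True:
        item = buf.get()
        if item is _DONE:
            return
        if isinstance(item, _Failure):
            raise item.exc
        yield item


def run_pipeline(source: Iterable[MiniBatch], stages: List[Stage],
                 depth: int = 2) -> Iterator[MiniBatch]:
    """Chain the stages in order, with a prefetch buffer after each one."""
    it: Iterator[MiniBatch] = iter(source)
    for stage in stages:
        it = prefetch(stage(it), depth)
    return it


def default_sampler(batches: Iterator[MiniBatch]) -> Iterator[MiniBatch]:
    # Stand-in for a built-in stage: keep at most 2 neighbors per seed.
    for mb in batches:
        yield {**mb, "neighbors": mb["neighbors"][:2]}


def my_sampler(batches: Iterator[MiniBatch]) -> Iterator[MiniBatch]:
    # User-defined replacement: keep every neighbor.
    for mb in batches:
        yield {**mb, "neighbors": list(mb["neighbors"])}


def count_neighbors(batches: Iterator[MiniBatch]) -> Iterator[MiniBatch]:
    # A downstream stage that does not care which sampler ran before it.
    for mb in batches:
        yield {**mb, "num_neighbors": len(mb["neighbors"])}


if __name__ == "__main__":
    source = [{"seed": i, "neighbors": [i + 1, i + 2, i + 3]} for i in range(3)]
    stages: List[Stage] = [default_sampler, count_neighbors]
    stages[0] = my_sampler  # swap one stage; the scheduling wrapper is unchanged
    for mb in run_pipeline(source, stages):
        print(mb["seed"], mb["num_neighbors"])
```

With the swap in place, every seed reports 3 neighbors; with `default_sampler` it would report 2. Threads help most when stages are I/O-bound (for example, reading features from disk); CPU-heavy pure-Python stages would need processes or native code to run truly in parallel under the GIL.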

In summary, GraphBolt offers the following benefits:

A flexible, pipelined framework for GNN data loading and preprocessing.

Highly efficient canonical implementations.

Efficient scheduling.